# How to install PySpark on Windows 10 #

This tutorial demonstrates how to install PySpark and how to manage the environment variables it needs on Windows, Linux, and macOS. Apache Spark is written in Scala, a Java Virtual Machine (JVM) language, so a working Java installation is required. We also give some tips for the often-neglected Windows audience on using an IDE, such as PyCharm, or IntelliJ IDEA for Java and Scala with the Python plugin, to work with PySpark. If you would like to learn more about PySpark, take DataCamp's Introduction to PySpark.

Setup options: full cluster-like access (multiple projects, multiple connections), with a setup time of 40 minutes; single-project access (single project, single connection); and multi-project access (multiple projects, single connection), with a setup time of 20 minutes.
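Managing the environment variables mentioned above mostly comes down to setting `SPARK_HOME`, optionally `JAVA_HOME`, and adding their `bin` folders to `PATH`. The sketch below assumes you have already unpacked a Spark distribution and installed Java; `with_spark_env` and the example folders such as `C:\spark` and `C:\Java\jdk` are hypothetical, not part of Spark. It returns a modified copy of an environment rather than changing your system settings.

```python
import ntpath
import posixpath


def with_spark_env(env, spark_home, java_home, windows=True):
    """Return a copy of `env` with SPARK_HOME, JAVA_HOME and PATH set up for Spark.

    Hypothetical helper: spark_home and java_home are wherever you unpacked
    Spark and installed Java on your machine.
    """
    # Use Windows-style paths (backslashes, ';' separator) or POSIX-style ones
    path_mod = ntpath if windows else posixpath
    separator = ";" if windows else ":"

    new_env = dict(env)  # copy, so the caller's environment is not modified
    new_env["SPARK_HOME"] = spark_home
    new_env["JAVA_HOME"] = java_home

    # Prepend the bin folders so `pyspark`, `spark-submit` and `java` are found first
    bin_dirs = [path_mod.join(spark_home, "bin"), path_mod.join(java_home, "bin")]
    old_path = new_env.get("PATH", "")
    new_env["PATH"] = separator.join(bin_dirs + ([old_path] if old_path else []))
    return new_env


if __name__ == "__main__":
    env = with_spark_env({"PATH": r"C:\Windows"}, r"C:\spark", r"C:\Java\jdk")
    print(env["PATH"])  # C:\spark\bin;C:\Java\jdk\bin;C:\Windows
```

The resulting dictionary could be passed to `subprocess.run(..., env=...)` for a single process. For a permanent setting on Windows you would instead use the System Properties environment-variable dialog or the `setx` command.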

Despite the fact that Python has been present in Apache Spark almost from the beginning, installing it was not exactly the `pip install` type of setup the Python community is used to. Related video walkthroughs include *Running First PySpark Application in PyCharm IDE with Apache Spark 2.3.0*.

Setup on Windows also differs from macOS, because Windows does not use Homebrew. On a Mac, Homebrew places Spark under a path such as `/usr/local/Cellar/apache-spark/2.4.4/libexec`, which is what `SPARK_HOME` points to; on Windows, you have to set `SPARK_HOME` up yourself.
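Since PySpark can now also be installed with `pip install pyspark`, it can help to check which kind of setup a machine actually has. The sketch below is a hypothetical helper (`describe_pyspark_setup` is not a Spark API); it assumes that an explicitly set `SPARK_HOME` should take priority, and it uses `importlib.util.find_spec` to see whether the `pyspark` package is importable without actually importing it.

```python
import importlib.util
import os


def describe_pyspark_setup(env, pyspark_importable=None):
    """Describe which kind of PySpark setup appears to be present (hypothetical helper)."""
    if pyspark_importable is None:
        # find_spec returns None when no importable `pyspark` package is found
        pyspark_importable = importlib.util.find_spec("pyspark") is not None

    spark_home = env.get("SPARK_HOME")
    if spark_home:
        # A manually unpacked distribution (or Homebrew's libexec folder on macOS)
        return f"SPARK_HOME points to {spark_home}"
    if pyspark_importable:
        # A pip-installed pyspark package bundles Spark itself
        return "pip-installed pyspark package (SPARK_HOME not set)"
    return "no Spark found: set SPARK_HOME or run `pip install pyspark`"


if __name__ == "__main__":
    print(describe_pyspark_setup(os.environ))
```

The `pyspark_importable` parameter exists only so the logic can be tried without touching the real Python installation.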

# Running PySpark code on Windows 10 #

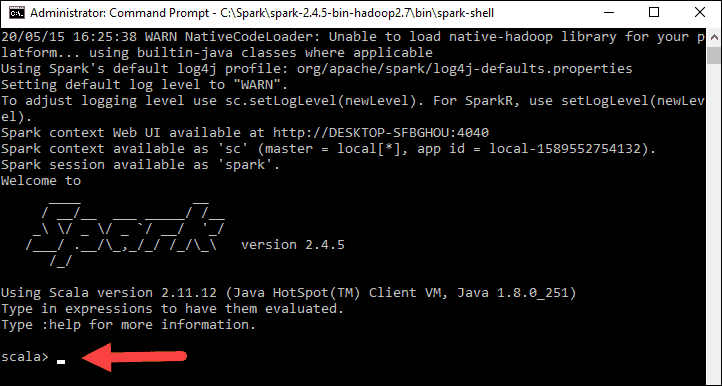

Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS), and it should run on any platform that runs a supported version of Java. All you need is to have `java` installed on your system `PATH`, or the `JAVA_HOME` environment variable pointing to a Java installation.

After the installation is complete, try typing `pyspark` in a terminal. To run Spark interactively in a Python interpreter, use `bin/pyspark`. To run one of the bundled Python examples, use `spark-submit`, for example `bin/spark-submit examples/src/main/python/pi.py 10`.
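The Java prerequisite and the `pi.py` command above can be checked and assembled from Python. This is a sketch under assumptions: `java_is_available` and `pi_example_command` are hypothetical helpers, and the Windows branch assumes the `.cmd` launchers (such as `bin\spark-submit.cmd`) that Spark distributions ship alongside the Unix shell scripts.

```python
import ntpath
import os
import posixpath
import shutil


def java_is_available(env):
    """Spark needs `java` on PATH or JAVA_HOME pointing to a Java installation.

    Treats any non-empty JAVA_HOME as sufficient; it does not verify the folder.
    """
    if env.get("JAVA_HOME"):
        return True
    # shutil.which returns None when `java` is not found on the given PATH
    return shutil.which("java", path=env.get("PATH", "")) is not None


def pi_example_command(spark_home, partitions=10, windows=True):
    """Build the argument list for the bundled pi.py example (hypothetical helper)."""
    path_mod = ntpath if windows else posixpath
    # Windows launchers in bin\ end in .cmd; Unix ones are plain shell scripts
    launcher = "spark-submit.cmd" if windows else "spark-submit"
    return [
        path_mod.join(spark_home, "bin", launcher),
        path_mod.join(spark_home, "examples", "src", "main", "python", "pi.py"),
        str(partitions),
    ]


if __name__ == "__main__":
    print("Java found:", java_is_available(os.environ))
    print(pi_example_command(os.environ.get("SPARK_HOME", r"C:\spark"), windows=os.name == "nt"))
```

Building the command as a list of arguments avoids quoting problems with spaces in Windows paths; the list could then be passed to `subprocess.run` once Java and Spark are in place.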
